| Update: answers to some questions raised below are available in a newer post. |

Fact (1):

Functions cannot accept aligned types by value. Take this as a given, though it does make sense: a function’s stack frame can be located anywhere in memory, so a hypothetical aligned argument passed by value cannot sit at a fixed offset relative to the frame base. There would be no sane way for the function to reference that argument, unless some compiler writer out there is willing to pad every stack frame with some slack, generate function prologues that take ebp modulo the desired alignment, fiddle with it to deduce the aligned argument’s location by some convention, and so on. Let’s not even start to imagine stack frame cleanup.
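To make this concrete, here is a minimal sketch of the usual way around the restriction: hand the aligned object over by const reference, so the callee receives only an address and nothing has to be placed in the argument area. It uses the standard `alignas(16)` as a portable stand-in for VC++'s `__declspec(align(16))`, and `Vec4`, `is_aligned_16` and `sum` are hypothetical names made up for this illustration.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical 16-byte-aligned math type
// (alignas(16) stands in for __declspec(align(16))).
struct alignas(16) Vec4 {
    float x, y, z, w;
};

// True if the address is a multiple of 16.
inline bool is_aligned_16(const void* p) {
    return reinterpret_cast<std::uintptr_t>(p) % 16 == 0;
}

// Takes the argument by const reference: only an address is passed,
// so the caller's (aligned) object is used in place.
inline float sum(const Vec4& v) {
    assert(is_aligned_16(&v));
    return v.x + v.y + v.z + v.w;
}
```

Declaring `sum(Vec4 v)` instead is the by-value form that this fact is about.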

Fact (2):

Ever since MMX, and more pronouncedly since SSE, data alignment has been a major performance opportunity for mathematical code. In games and game-like apps, practically every mathematical object (matrices, vectors, and thus every object that holds them as members) is aligned on a 16-byte boundary.

Fact (3):

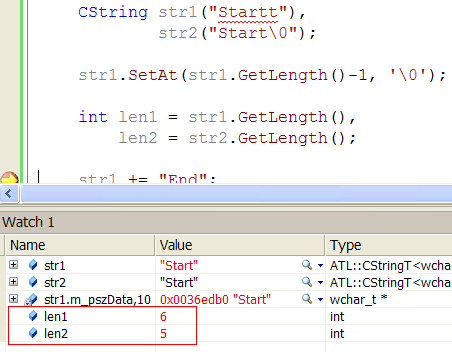

In VC++, when you try to instantiate an STL vector of an aligned type, you get slapped by the compiler:

error C2719: '_Val': formal parameter with __declspec(align('16')) won't be aligned

The reason is that the implementation of std::vector::resize has the signature –

void resize(size_type _Count, _Elem _Ch);

That is, it accepts an element of the contained type by value. Semantically – resize pads the existing buffer with copies of _Ch as needed to get to size _Count.
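For readers who have not used the two-argument form, the padding semantics can be sketched with plain ints, which sidesteps the alignment problem entirely. `padded_example` is a hypothetical name used only here.

```cpp
#include <cassert>
#include <vector>

// Builds {1, 2, 3} and grows it to five elements with a fill value.
inline std::vector<int> padded_example() {
    std::vector<int> v;
    v.push_back(1);
    v.push_back(2);
    v.push_back(3);

    v.resize(5, 9);  // appends two copies of 9; existing elements are kept
    assert(v.size() == 5);
    return v;
}
```

When the requested size is smaller than the current one, the vector is simply truncated and the fill value goes unused.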

Corollary:

MS’s std::vector is pretty close to useless.

I wasn’t as decisive until recently, when several things happened: (1) I discovered gcc’s implementation does not suffer from the same issue, so it’s nothing inherent in the C++ standard. (2) I manually modified vector::resize’s signature to accept a const reference, and the code compiled cleanly and ran well. (3) Most importantly, I mailed Stephan T. Lavavej, Microsoft’s own STL grand master, and asked him whether I was missing something. His response was:

I filed internal bug number Dev10#891686 about this. There’s a reason that our resize() is currently taking T by value – it guards against being given one of the vector’s own elements (which is perfectly legal). We’ll investigate changing this in VC11, but we’d have to make nontrivial changes to resize() and possibly other places throughout <vector>.

Note that header hacking is unsupported.

Frankly, I don’t fully understand this. The only case I can see where you might need to guard against being given one of the vector’s own elements is when that element might be changed by resize’s padding itself. That could happen when your vector buffer holds some slack, you pass _Ch from that slack, and resize into that very slack space, e.g.:

std::vector<CWhateva> v;

v.resize(100);

v.resize(50); // v's storage capacity is still 100

v.resize(100, v[80]); // when _SECURE_SCL is off, there would be no runtime checks and this may run.

Indeed, if v[80] is accepted by reference, even a const one, it would be overwritten in the last line. I don’t see an inherent problem here, as it seems it should be overwritten by a copy of itself, but I can understand the feeling that overwriting an object with itself might be sensitive. (Does anyone have a concrete example?)
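One scenario that does look hazardous, and this is my guess rather than anything stated in the reply, is growth that reallocates: once the buffer moves, a reference to one of the vector's own elements refers to freed storage. A by-reference resize could guard against that by copying the argument into a local before touching the buffer. The sketch below illustrates the idea with a hypothetical free function `grow_by_ref`; it is not MS's code, and it is written against the public vector interface only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: grows v to new_size, filling with `fill`.
// `fill` is taken by const reference and may alias an element of v.
template <typename T>
void grow_by_ref(std::vector<T>& v, std::size_t new_size, const T& fill) {
    assert(new_size >= v.size());

    // Copy first: if the growth below reallocates, `fill` may end up
    // referring to storage that has already been released.
    const T safe_copy = fill;

    v.reserve(new_size);  // may reallocate, invalidating `fill`
    while (v.size() < new_size) {
        v.push_back(safe_copy);
    }
}
```

A call such as `grow_by_ref(v, 1000, v[1])` then stays well defined even when the buffer moves. As far as I understand, a local copy like `safe_copy` does not hit the alignment restriction, since the compiler is free to align locals itself.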

Then again, maybe he meant something different altogether.

Most of all, I gotta say I was mightily impressed with the speed and seriousness of MS’s replies. I’m developing quite an email-CHUTZPA lately (more in coming posts), and am always flattered to get responses from busy people. MS does deserve some kudos there: before realizing Stephan was the right address for this inquiry, I mailed the almighty Herb Sutter himself, and got similarly swift and informative responses.